I Ragged the One Big Beautiful Bill into a Chatbot

One of the most common excuses lawmakers give when facing a tough vote is that the bill is massive and they didn’t have enough time to read it thoroughly—yet they vote anyway. It sounds like a student making a lame excuse for missing homework, just before the adults shout back, “Time management!”

Well, with AI, we can finally put that excuse to rest. Large Language Models (LLMs) are incredibly good at reading and understanding complex text—so good, in fact, that they can explain it back to you like you're eight years old. Imagine not having to spend hours poring over legislation and still wondering what you just read. No more waiting for the evening news to cherry-pick and spin the parts they want you to hear.

So, as the President signed the newly passed One Big Beautiful Bill into law on our nation’s birthday this weekend, I ragged the bill text into a chatbot. No more reading assignments. No more guessing games. Why read it when you can just talk to it?

I used the BeautifulSoup library to scrape the legislation text from the official Congress website. With help from GitHub Copilot and Gemini 2.5, I was able to get things moving quickly. But my first attempt to scrape the text failed miserably. Copilot suggested it was likely due to anti-scraping mechanisms on .gov domains. So basically, you—the human—can read the bill in your browser, but your API can’t?

Interestingly, Copilot didn’t just throw in the towel. It persisted, tried a few alternatives, and eventually found another legitimate URL from the Congress web site without scraping protection.

With the full text downloaded—23,000 lines of legalese—I broke it into chunks and vectorized them into a Chroma vector store. This creates a mathematical representation of the bill’s content. The whole concept of vector embedding is absolutely fascinating. Who knew you could convert text into high-dimensional vectors in a way that preserves its semantic meaning—quantifiably and verifiably? To put it simply: the vector for the word “king,” minus “male,” plus “female,” gives you the vector for “queen.” 👑

Once the vector store is built, we can issue queries to it by converting a user’s prompt into a vector. The vector store acts like a semantic search engine, returning the most relevant text chunks based on vector similarity. The most reliable embedding model I’ve used so far is OpenAI’s text-embedding-ada-002-v2.

As the final step, both the user prompt and the retrieved text chunks are passed into the LLM—in my case, gpt-4o-mini. This whole workflow is known as RAG, or Retrieval-Augmented Generation.

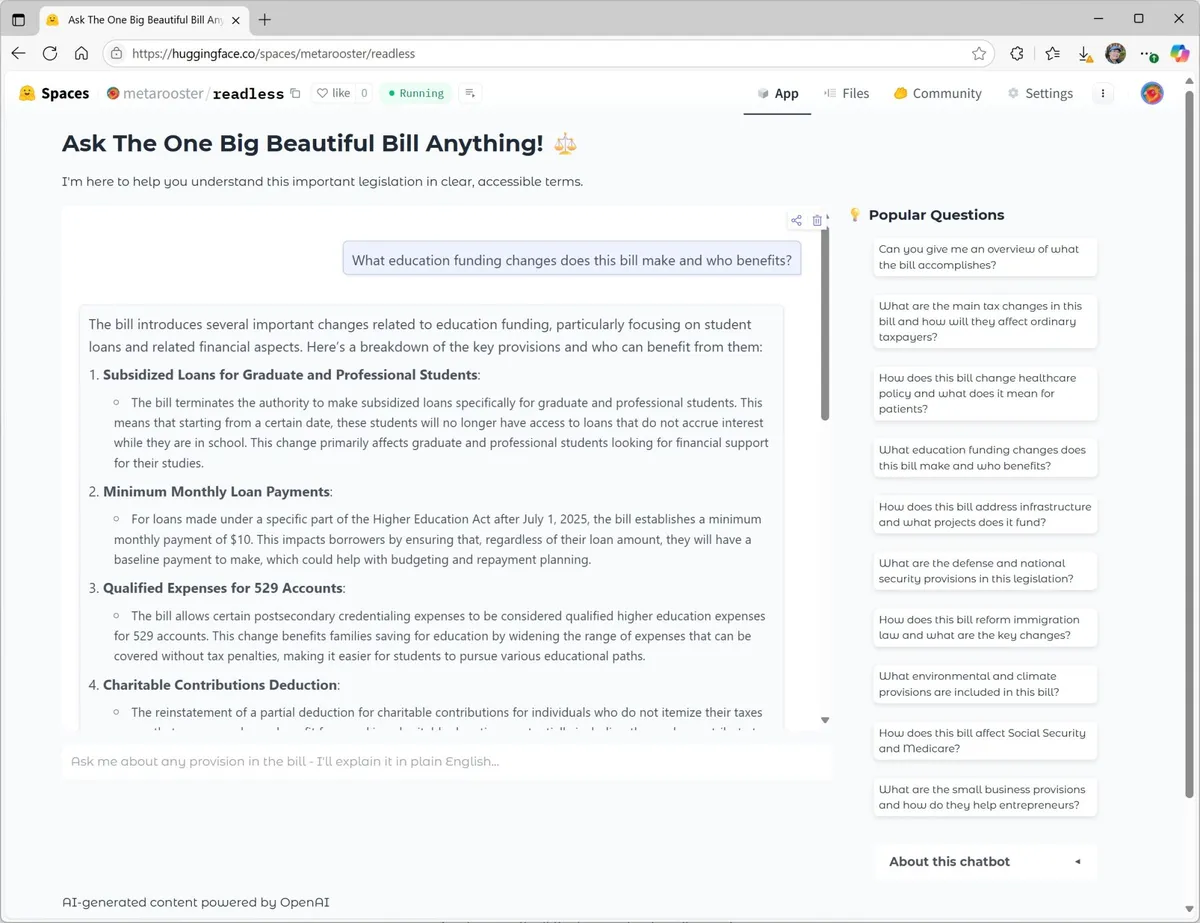

The app is hosted and available free of charge with full source code here: https://huggingface.co/spaces/metarooster/readless

I use it to fact-check news stories, and have discovered some surprising parts of this massive bill I’d never heard of. I hope you too will find it useful.